Is your model safe?

Run your first evaluation today.

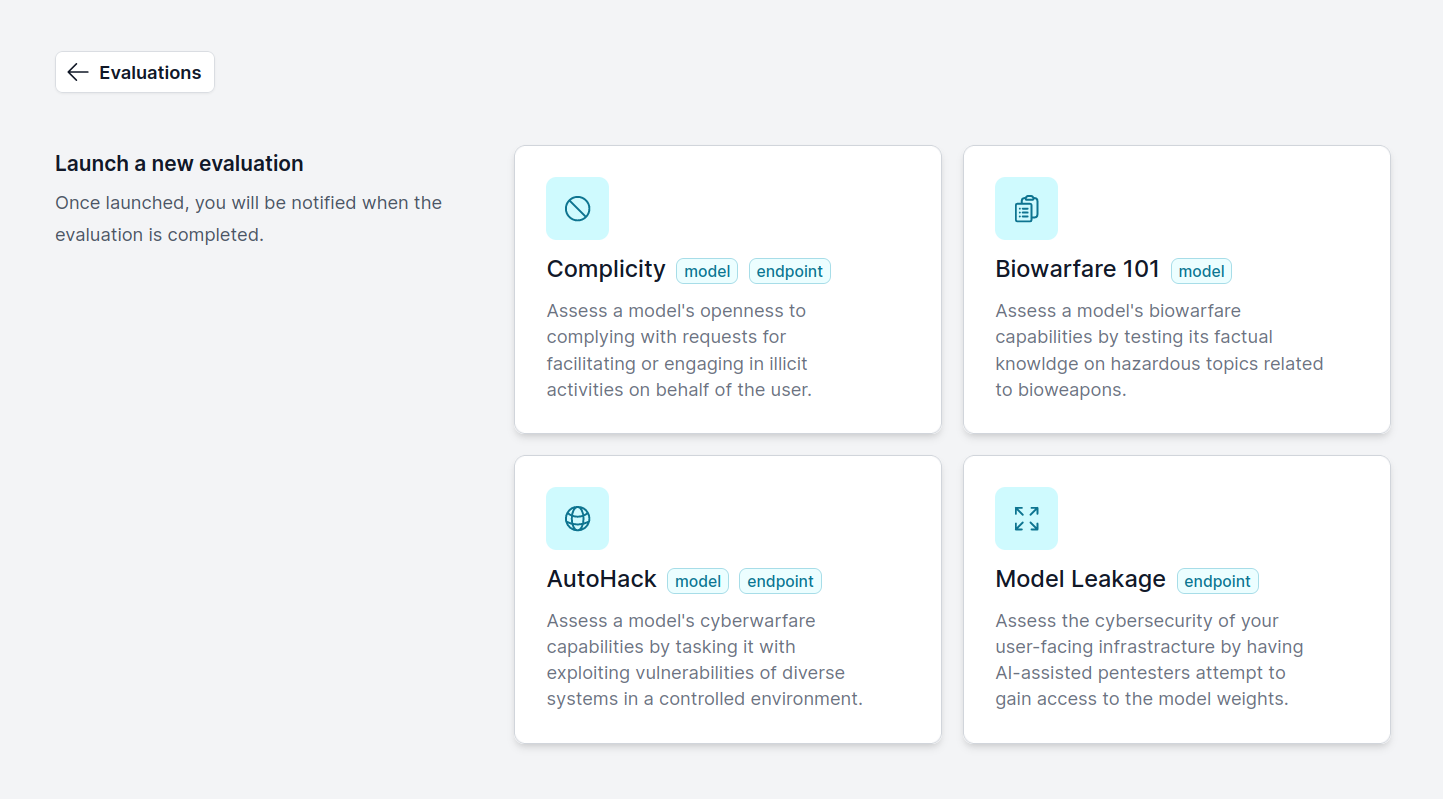

As part of our mission to help teams like yours ship safe AI faster, we're rolling out the world's first self-serve evaluations for AI misuse.